See the Ethics and Data Statement

WikiArt Emotions Dataset

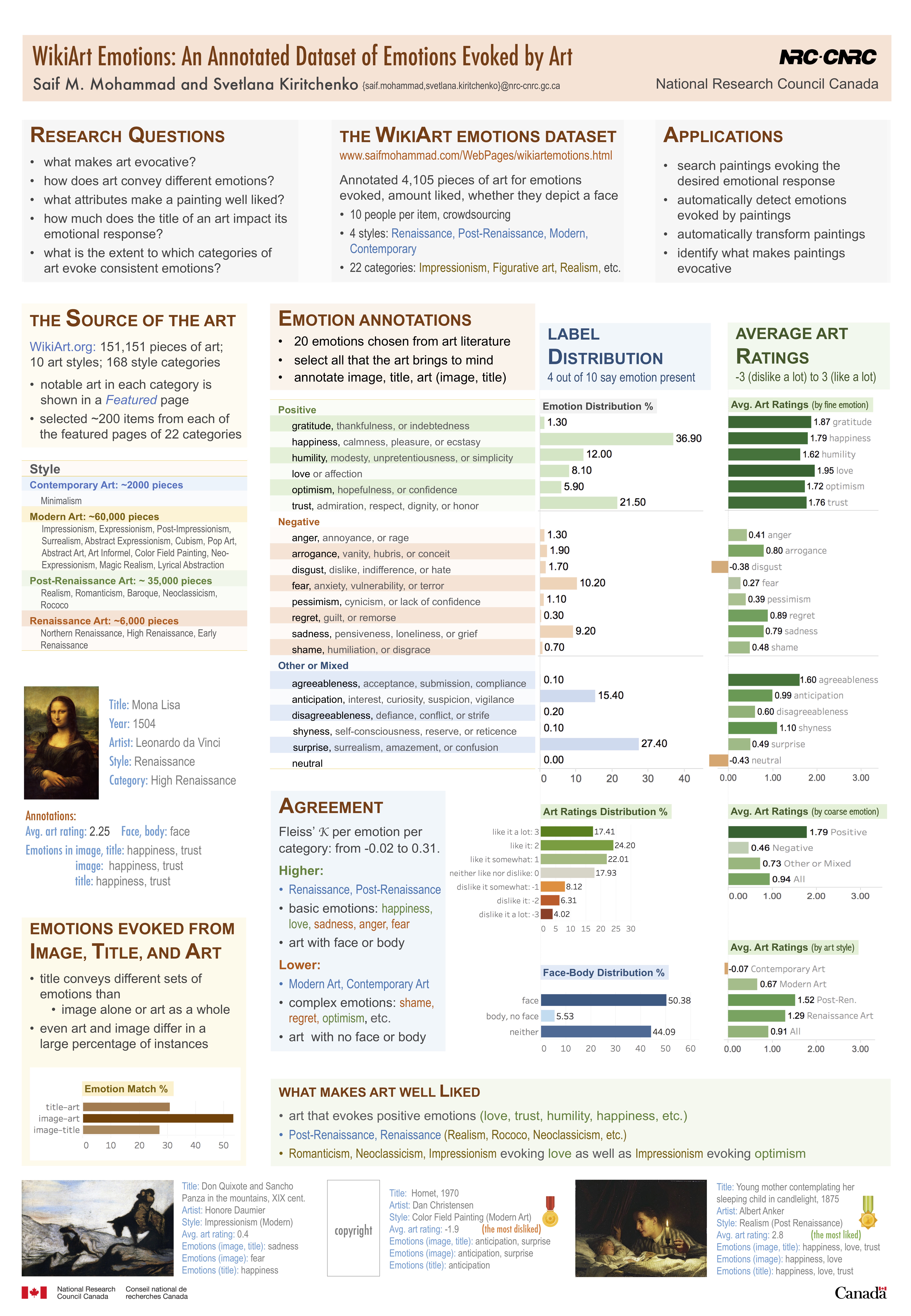

WikiArt Emotions is a dataset of 4,105 pieces of art (mostly paintings) annotated for the emotions evoked in the observer. The pieces of art were selected from WikiArt.org's collection for twenty-two categories (impressionism, realism, etc.) from four western styles (Renaissance Art, Post-Renaissance Art, Modern Art, and Contemporary Art). WikiArt.org shows notable art in each category on a Featured page. We selected ~200 items from the Featured page of each category. The art is annotated via crowdsourcing for one or more of twenty emotion categories (including neutral). In addition to emotions, the art is also annotated for whether it includes the depiction of a face and how much the observers like the art. We do not redistribute the art (images); we provide only the annotations.

This study has been approved by the NRC Research Ethics Board (NRC-REB) under protocol number 2017-98. REB review seeks to ensure that research projects involving humans as participants meet Canadian standards of ethics.

Annotations

Each piece of art was annotated by at least 10 annotators. The annotations include:

- all emotions that the image of art brings to mind;

- all emotions that the title of art brings to mind;

- all emotions that the art as a whole (title and image) brings to mind;

- a rating on a scale from -3 (dislike it a lot) to 3 (like it a lot);

- whether the image shows the face or the body of at least one person or animal;

- whether the art is a painting or something else (e.g., sculpture).

We asked annotators to identify the emotions that the art evokes in three scenarios:

- Scenario I: we present only the image (no title), and ask the annotator to identify the emotions it evokes;

- Scenario II: we present only the title of the art (no image), and ask the annotator to identify the emotions it evokes;

- Scenario III: we present both the title and the image of the art, and ask the annotator to identify the emotions that the art as a whole evokes.
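To make the shape of a single annotator response concrete, here is a minimal sketch in Python. The class name `ArtResponse` and all field names are hypothetical and chosen for illustration; they do not describe the distributed file format. Treating the liking rating as an integer is also an assumption.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class ArtResponse:
    """One annotator's answers for one piece of art (hypothetical layout)."""

    image_emotions: Set[str]   # Scenario I: image only
    title_emotions: Set[str]   # Scenario II: title only
    art_emotions: Set[str]     # Scenario III: title and image together
    liking: int                # -3 (dislike it a lot) to 3 (like it a lot)
    shows_face_or_body: bool   # face or body of a person or animal is shown
    is_painting: bool          # False for other art forms, e.g. sculpture

    def __post_init__(self) -> None:
        # Reject ratings outside the scale described above.
        if not -3 <= self.liking <= 3:
            raise ValueError("liking must be between -3 and 3")


# Example with placeholder emotion labels.
example = ArtResponse(
    image_emotions={"happiness"},
    title_emotions={"neutral"},
    art_emotions={"happiness", "love"},
    liking=2,
    shows_face_or_body=True,
    is_painting=True,
)
```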

Paper:

WikiArt Emotions: An Annotated Dataset of Emotions Evoked by Art. Saif M. Mohammad and Svetlana Kiritchenko. In Proceedings of the 11th Edition of the Language Resources and Evaluation Conference (LREC-2018), May 2018, Miyazaki, Japan.

Paper (pdf) BibTeX Poster

Terms of Use:

- If you use this dataset, cite the paper listed above.

- Do not redistribute the data. Direct interested parties to this page: http://saifmohammad.com/WebPages/wikiartemotions.html

- National Research Council Canada (NRC) disclaims any responsibility for the use of the dataset and does not provide technical support. However, the contact listed on this page will be happy to respond to queries and requests for clarification.

WikiArt Emotions: Data and Ethics Statement

Saif M. Mohammad and Svetlana Kiritchenko, National Research Council Canada

Project Homepage: http://saifmohammad.com/WebPages/wikiartemotions.html

Introduction and Curation Rationale

Art is imaginative human creation meant to be appreciated, make people think, and evoke an emotional response. Here, for the first time, we create a dataset of more than 4,000 pieces of art (mostly paintings) that has annotations for emotions evoked in the observer. The pieces of art are selected from WikiArt.org’s collection for four western styles (Renaissance Art, Post-Renaissance Art, Modern Art, and Contemporary Art). The art is annotated via crowdsourcing for one or more of twenty emotion categories (including neutral). In addition to emotions, the art is also annotated for whether it includes the depiction of a face and how much the observers like the art. The dataset, which we refer to as the WikiArt Emotions Dataset, can help answer several compelling questions, such as: what makes art evocative, how does art convey different emotions, what attributes of a painting make it well liked, what combinations of categories and emotions evoke strong emotional response, how much does the title of a piece of art impact its emotional response, and what is the extent to which different categories of art evoke consistent emotions in people.

One can label art for emotions from many perspectives: what emotion is the painter trying to convey, what emotion is felt by the observer (the person viewing the painting), what emotion is felt by the entities depicted in the painting, how does the observer feel towards entities depicted in the painting, etc. All of these are worthy annotations to pursue. However, in this work we focus on the emotions evoked in the observer (the annotator) by the painting. That decided, it is still worth explicitly articulating what it means for a painting to evoke an emotion, as here too, many different interpretations exist. Should one label a painting with sadness if it depicts an entity in an unhappy situation, but the observer does not feel sadness on seeing the painting? How should one label a piece of art depicting and evoking many different emotions, for example, a scene of an angry mother elephant defending her calf from a predator? And so on. For this annotation project we chose to instruct annotators to label all emotions that the painting brings to mind. Our exact instructions in this regard are shown below:

Art is imaginative human creation meant to be appreciated and evoke an emotional response. We will show you pieces of art, mostly paintings, one at a time. Your task is to identify the emotions that the art evokes, that is, all emotions that the art brings to mind.

Which Emotions Apply Frequently to Art

Humans are capable of experiencing hundreds of emotions and it is likely that all of them can be evoked by paintings. However, some emotions are more frequent than others and come more easily to mind. Further, different individual experiences may prime different people to easily recall different sets of emotions. Also, emotion boundaries are fuzzy and some emotion pairs are more similar than others. All of this means that an open-ended question asking annotators to enter the emotions evoked through a text box is suboptimal. Thus we chose to provide a set of options (each corresponding to a set of closely related emotions) and asked annotators to check all emotions that apply. We chose the options from these sources:

- The psychology literature on basic emotions (Ekman, 1992; Plutchik, 1980; Parrot, 2001).

- The psychology literature on emotions elicited by art (Silvia, 2005; Silvia, 2009; Millis, 2001; Noy and Noy-Sharav, 2013).

- Our own annotations of the WikiArt paintings in a small pilot effort.

We grouped similar emotions into a single option. The final result was 19 options of closely related emotion sets and a final neutral option. To ease annotation, the options were arranged in three sets: ‘positive’, ‘negative’, and ‘mixed or other’, as shown in Table 3 in the paper. A text box was also provided for the annotators to capture any additional emotions that were not part of the predefined set of 19 options. Many extra emotions were entered by the annotators, including uncertainty, amusement, and jealousy. However, none of the proposed additional emotions was used more than 20 times overall, which indicates that the predefined set of 19 emotions we provided covered the art emotion space well.

Annotation

We annotated all of our data by crowdsourcing. Links to the art and the annotation questionnaires were uploaded on the crowdsourcing platform, CrowdFlower (now Appen). All annotators for our tasks had already agreed to the CrowdFlower terms of agreement. They chose to do our task among the hundreds available, based on interest and compensation provided. Respondents were free to annotate as many instances as they wished to. The annotation task was approved by the National Research Council Canada’s Institutional Review Board, which reviewed the proposed methods to ensure that they were ethical. Special attention was paid to obtaining informed consent and protecting participant anonymity.

Art Variety

As of January 2018, WikiArt.org had 151,151 pieces of art (mostly paintings) corresponding to ten main art styles and 168 style categories. See Table 1 in the paper for details. The art is also independently classified into 54 genres. Portrait, landscape, genre painting, abstract, and religious painting are the genres with the most items. The art can be in one of 183 different media. Oil, canvas, paper, watercolor, and panel are the most common media. For each piece of art, the website provides the title, the image, the name of the artist, the year in which the piece of art was created, the style, the genre, and the medium. Figure 1 (in the paper) shows the page WikiArt.org provides for the Mona Lisa. We collected the URLs and the meta-information for all of these pieces of art and stored them in a simple, easy-to-process file format. The data is made freely available via our WikiArt Emotions project webpage for non-commercial research and art- or education-related purposes.

For our human annotation work, we chose about 200 paintings each from twenty-two categories (4,105 paintings in total). The categories chosen were the most populous ones (categories with more than 1000 paintings) from four styles: Modern Art, Post-Renaissance Art, Renaissance Art, and Contemporary Art. For each chosen category, we selected up to 200 paintings displayed on WikiArt.org’s ‘Featured’ tab for that category. (WikiArt selects certain paintings from each category to feature more prominently on its website. These are particularly significant pieces of art.) Table 2 (in the paper) summarizes these details.

Annotator Demographic

No direct annotator demographic information is available. We annotated all of our data by crowdsourcing. Links to the art and the annotation questionnaires were uploaded on the crowdsourcing platform, CrowdFlower. Annotator demographics are therefore expected to be similar to those of the general pool of annotators on CrowdFlower.

On the CrowdFlower task settings, we specified that we needed annotations from ten people for each instance. However, because of the way the gold instances are set up, they are annotated by more than ten people. The median number of annotations is still ten. In all, 308 people annotated between 20 and 1,525 pieces of art. A total of 41,985 sets of responses (for Q1–Q5) were obtained for the 4,105 pieces of art.
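As a quick consistency check on the figures above, the following sketch computes the mean number of response sets per piece of art. It uses only the two totals stated in the text; the variable names are illustrative.

```python
total_responses = 41_985   # sets of responses reported above
num_items = 4_105          # pieces of art annotated

# Mean number of response sets per piece of art.
mean_per_item = total_responses / num_items

# Responses beyond a flat ten per item.
extra = total_responses - 10 * num_items

print(round(mean_per_item, 2))  # 10.23
print(extra)                    # 935
```

A mean slightly above ten alongside a median of ten is consistent with a small number of items (such as the gold instances) receiving more than ten annotations.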

Funding

Data annotation was funded by National Research Council Canada (NRC).

Ethics Review

This study has been approved by the NRC Research Ethics Board (NRC-REB) under protocol number 2017-98. REB review seeks to ensure that research projects involving humans as participants meet Canadian standards of ethics.

Ethical Considerations

a. Coverage: The art pieces included for annotation are not a random or representative sample of art in general.

b. Not Immutable: The emotion labels for the art do not indicate an inherent unchangeable attribute. Emotion associations can change with time, but the entries here are largely fixed. They pertain to the time they are created and to the people who annotated them.

c. Perceptions of emotion associations (not “right” or “correct” associations): Our goal here was to determine broad trends in how people perceive the art. These are not meant to be the “right” or “correct” emotions evoked by the art. The individual labels are not meant to represent the perceptions of people at large.

d. Socio-Cultural Biases: Emotion annotations of art are not expected to be highly consistent across people for a number of reasons, including: differences in human experience that impact how they perceive art, the subtle ways in which art can express affect, and fuzzy boundaries of affect categories. With the annotations in the WikiArt Emotions dataset, we can now determine the extent to which this agreement exists across different emotions, and how the agreements are impacted by attributes of the painting such as style, style category, and depictions of faces.

e. Limitations of Aggregation by Majority Vote: For each item (image, title, or art), we will refer to the emotion that receives the majority of the votes from the annotators as the predominant emotion. In case of ties, all emotions with the majority vote are considered the predominant emotions. When aggregating the responses to obtain the full set of emotion labels for an item, we wanted to include not just the predominant emotion, but all others that apply, even if their presence is more subtle. Thus, we chose a somewhat generous aggregation criterion: if at least 40% of the responses (four out of ten people) indicate that a certain emotion applies, then that label is chosen. We will refer to this as the Ag4 dataset. 929 images, 1,332 titles, and 823 paintings did not receive sufficient votes to be labeled with any emotion. These items were set aside. The rest of the items and their emotion labels can be used to train and test machine learning algorithms to predict the emotions evoked by art.

We also created two other versions of the labeled dataset by using an aggregation threshold of 30% and 50%, respectively. (If at least 30%/50% of the responses (three/five out of ten people) indicate that a certain emotion applies, then that label is chosen.)
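The aggregation rule described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code. The function names and the example responses are hypothetical, the emotion labels are placeholders, and "predominant" is interpreted here as the emotion(s) with the most votes.

```python
from collections import Counter


def vote_counts(responses):
    """Count how many annotators selected each emotion for one item."""
    return Counter(emotion for response in responses for emotion in set(response))


def predominant_emotions(responses):
    """Emotion(s) with the most votes; ties return every top-voted emotion."""
    counts = vote_counts(responses)
    if not counts:
        return set()
    top = max(counts.values())
    return {emotion for emotion, c in counts.items() if c == top}


def aggregate(responses, threshold_percent=40):
    """Keep every emotion chosen by at least threshold_percent of annotators."""
    n = len(responses)
    counts = vote_counts(responses)
    # Integer comparison avoids floating-point issues at the boundary.
    return {e for e, c in counts.items() if c * 100 >= threshold_percent * n}


# Ten hypothetical responses for one item (one set of emotions per annotator).
responses = [
    {"happiness", "love"}, {"happiness"}, {"happiness", "trust"},
    {"happiness"}, {"love"}, {"love", "trust"}, {"love"},
    {"trust"}, {"neutral"}, {"happiness"},
]

print(sorted(aggregate(responses, 30)))         # ['happiness', 'love', 'trust']
print(sorted(aggregate(responses, 40)))         # ['happiness', 'love']
print(sorted(aggregate(responses, 50)))         # ['happiness']
print(sorted(predominant_emotions(responses)))  # ['happiness']
```

An item for which `aggregate` returns an empty set corresponds to the case described above in which no emotion receives sufficient votes and the item is set aside.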

As noted above, different socio-cultural groups can perceive art differently, and taking the majority vote can have the effect of only considering the perceptions of the majority group. When these views are crystalized in the form of a dataset, it can lead to the false perception that the norms so captured are “standard” or “correct”, whereas other associations are “non-standard” or “incorrect”. Thus, it is worth explicitly disavowing that view and stating that the dataset simply captures the perceptions of the majority group among the annotators.

f. Source of Errors: Even though the researchers take several measures to ensure high-quality and reliable data annotation (e.g., multiple annotators, clear and concise questionnaires, etc.), human error can never be fully eliminated in large-scale annotations. Expect a small number of clearly wrong entries.

g. Inappropriate Use Cases: This dataset was created with a specific research goal, as discussed at the beginning of this document. Using the dataset for commercial applications, for drawing inferences about individuals, or for building machine learning systems deployed in settings where the data is very different is not recommended. Do not use the dataset to automatically detect emotions of people from their facial expressions. Feel free to contact the authors about specific use-case scenarios.

Contact: saif.mohammad@nrc-cnrc.gc.ca